A potential benefit of GDPR is that, hate it or not, it gets people discussing privacy and what it means to them. Gone are the days of careless oversharing on the Internet. We, as consumers and data collectors, are forced to care and be accountable for the data in use. These laws are the first real push to protect individuals who have been victimized countless times through breaches, marketing, and other services.

With an increasing number of breaches, it’s no longer shocking or newsworthy when your data is gone. Yes, you should be notified, but is every breach really noteworthy? Here are just a few of the many breaches of 2018:

- Under Armour lost usernames, email addresses, and hashed passwords of 150 million users of its MyFitnessPal application.

- Facebook exposed data on up to 87 million users to the third-party firm Cambridge Analytica, and more accounts were exposed via the “View As” breach later in the year.

- Panera leaked 37 million records in plaintext for attackers to scrape.

- Ticketfly lost names, addresses, email addresses, and phone numbers for 27 million accounts.

- The Sacramento Bee lost about 19.5 million records, mostly California voter registration data along with subscriber information.

- PumpUp exposed data on 6 million users, including private messages between customers.

Breaches are now so common that they’re expected, and we should discuss them as something that will keep happening.

Let’s discuss some of the consumer rights defined in the regulation. (For more information, https://gdpr-info.eu/ organizes the articles really well.)

- Educated consent – The consumer is informed in “clear and plain language,” and consent to collect data can be withdrawn at any time. As security professionals, we should be excited to help the general public understand this technology and to educate individuals so that they can decide whether or not to withdraw.

- Correction – Consumers now have the right to correct inaccurate data about themselves. This is huge; accuracy is paramount.

- Data portability – The consumer has the right to transfer their personal data from one electronic processing system to another. Imagine making decisions about your personal data and choosing which company to patronize based on its ability to protect that data.

- Erasure – Consumers have the right to withdraw consent and ask for their personal data to be deleted. How often have you changed your mind and tried to back out of a program? As more companies move to the subscription model, canceling and removing yourself from their systems is often made obnoxious as a deterrent. Now users have the right to erase their presence completely.

- Access – Consumers have the right to know what data is being collected and how it’s being processed.

These rights are great, but for companies to really comply, they have to educate themselves. We should know how to describe things clearly and plainly. We should be able to accurately portray the level of risk we expect consumers to accept by doing business with us. Data is easily mishandled, and these laws force us to know where the data is and how it’s stored, transferred, and processed. That sort of accountability is remarkable: if a consumer wants to correct something, a company has to be able to identify every source of that information and correct it. Finally, companies are forced to follow their own data and how it’s processed!
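To make “identify every source” a little more concrete, here is a minimal Python sketch. It assumes you already have an inventory of every system that holds personal data; `DataStore` and `SubjectRequestHandler` are hypothetical names, and the in-memory dictionaries stand in for real databases and SaaS tools. Each request (access, correction, erasure) fans out to every registered store and reports which stores it touched, which also gives you a record that the request was handled.

```python
import json
from dataclasses import dataclass, field


@dataclass
class DataStore:
    """Hypothetical stand-in for one system holding personal data (CRM, billing, mailing list)."""
    name: str
    # subject_id -> {field name: value}
    records: dict[str, dict[str, str]] = field(default_factory=dict)


class SubjectRequestHandler:
    """Fans each data subject request out to every registered store."""

    def __init__(self, stores: list[DataStore]) -> None:
        self.stores = stores

    def access(self, subject_id: str) -> str:
        # Access / portability: everything held about the subject, grouped by store,
        # in a machine-readable format (JSON here).
        export = {s.name: s.records[subject_id] for s in self.stores if subject_id in s.records}
        return json.dumps(export, sort_keys=True)

    def correct(self, subject_id: str, field_name: str, value: str) -> list[str]:
        # Correction: update the field wherever it is stored; report which stores changed.
        touched = []
        for store in self.stores:
            record = store.records.get(subject_id)
            if record is not None and field_name in record:
                record[field_name] = value
                touched.append(store.name)
        return touched

    def erase(self, subject_id: str) -> list[str]:
        # Erasure: remove the subject from every store; report which stores held data.
        touched = []
        for store in self.stores:
            if store.records.pop(subject_id, None) is not None:
                touched.append(store.name)
        return touched


crm = DataStore("crm", {"u1": {"email": "old@example.com", "phone": "555-0100"}})
mailing = DataStore("mailing_list", {"u1": {"email": "old@example.com"}})
handler = SubjectRequestHandler([crm, mailing])
print(handler.correct("u1", "email", "new@example.com"))  # ['crm', 'mailing_list']
```

The hard part in practice is the inventory itself: any system missing from the list is a system where a correction or erasure silently never happens.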

So how do we do this?

We have to start by conducting our own assessments when processing sensitive data that may result in risks to consumers. Identifying these avenues may initially be a project in itself, but it also forces companies to consider the data involved in every new process and project they implement. Project managers are forced to ask, “Are we encrypting the data we send to third parties?” and “Who owns the keys to the datastore for our new cloud technology?” Each processing activity should be recorded, including the types of data, time limits, and whether the data is exported to third parties. The goal is that only authorized individuals can access the data, as needed, and that an audit trail exists to prove that access. The principle of least privilege is a concept we all know and love (for those who are unfamiliar: at every level, allow access only to the information and resources necessary for legitimate purposes), but now this law applies that concept to an individual’s data.
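A record of processing activities and a least-privilege check can be sketched together. The following Python is illustrative only: `ProcessingRecord`, `AccessController`, and the role and activity names are hypothetical, and a real deployment would rely on your identity provider and a tamper-resistant log rather than an in-memory list. The idea is that each activity declares its data types, retention, third parties, and the roles allowed to touch it, and every access attempt, granted or denied, lands in the audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProcessingRecord:
    """One hypothetical entry in a record of processing activities."""
    activity: str
    data_types: frozenset[str]
    retention_days: int
    third_parties: frozenset[str]
    authorized_roles: frozenset[str]  # least privilege: only these roles may access the data


@dataclass
class AccessController:
    records: dict[str, ProcessingRecord]
    # (UTC timestamp, user, role, activity, granted?) for every attempt
    audit_log: list[tuple[str, str, str, str, bool]] = field(default_factory=list)

    def request_access(self, user: str, role: str, activity: str) -> bool:
        record = self.records.get(activity)
        # Deny by default: unknown activities and unlisted roles get nothing.
        granted = record is not None and role in record.authorized_roles
        # Log denials as well as grants so the trail shows who tried, not just who succeeded.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), user, role, activity, granted)
        )
        return granted


call_center = ProcessingRecord(
    activity="call_center_support",
    data_types=frozenset({"name", "phone"}),
    retention_days=365,
    third_parties=frozenset({"outsourced_call_center"}),
    authorized_roles=frozenset({"support_agent"}),
)
controller = AccessController({"call_center_support": call_center})
controller.request_access("alice", "support_agent", "call_center_support")  # True
controller.request_access("bob", "marketing", "call_center_support")  # False
```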

To educate ourselves further, consider all of the artifacts collected about a device when you visit a website: searching on Google, existing on Facebook, tying accounts to your phone, enabling location data, and so on. Privacy on the Internet is often coupled with discussions of anonymity and attribution. With these artifacts collected, how anonymous are you? If the service you are using is free, you and your content are the data worth capitalizing on. What is collected about you may be sold to others or used to determine your life patterns and preferences, all to better “know” you without you ever explicitly stating them. Consider all the times Amazon gives you spot-on suggestions for your next purchase. How much can a site gather about you?

Forensic investigators piece together attackers’ habits all the time. In the same way, you as a consumer are very valuable, both for understanding the market and for letting the market understand your weaknesses.

Am I Unique? (https://amiunique.org) is a research site that attempts to identify your online footprint based on your operating system, browser, browser plug-ins, and more. You can see just how unique, and how far from “anonymous,” you really are. And this is only the technical data that leaks from your browsing; imagine adding the behaviours tied to it.
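To see why a handful of mundane attributes can be so identifying, it helps to measure them in bits. A value shared by a fraction p of browsers reveals -log2(p) bits, and every extra bit halves the crowd you blend into. The sketch below assumes the attributes are independent so their bits simply add, which overstates the total because real attributes correlate (an OS and its default fonts, for example). The fractions used are made up for illustration, not measurements from amiunique.org.

```python
import math


def surprisal_bits(fraction_sharing: float) -> float:
    """Bits revealed by an attribute value shared by this fraction of browsers: -log2(p)."""
    if not 0 < fraction_sharing <= 1:
        raise ValueError("fraction_sharing must be in (0, 1]")
    return -math.log2(fraction_sharing)


def fingerprint_bits(attribute_fractions: dict[str, float]) -> float:
    """Total bits, assuming independent attributes (an overestimate, since real ones correlate)."""
    return sum(surprisal_bits(p) for p in attribute_fractions.values())


def anonymity_set_size(population: int, bits: float) -> float:
    """Expected number of people in the population sharing the whole fingerprint."""
    return population / 2 ** bits


# Made-up fractions for illustration, not measurements.
example = {"os": 0.5, "browser_version": 0.125, "timezone": 0.25}
bits = fingerprint_bits(example)  # 1 + 3 + 2 = 6.0 bits
print(anonymity_set_size(1_000_000, bits))  # 15625.0
```

Three fairly common attributes already shrink a crowd of a million to about fifteen thousand lookalikes; a rare font list or plug-in set can remove the rest.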

So what’s considered sensitive? We’re used to what PCI considers sensitive, but GDPR covers even more data that needs protecting, including the following:


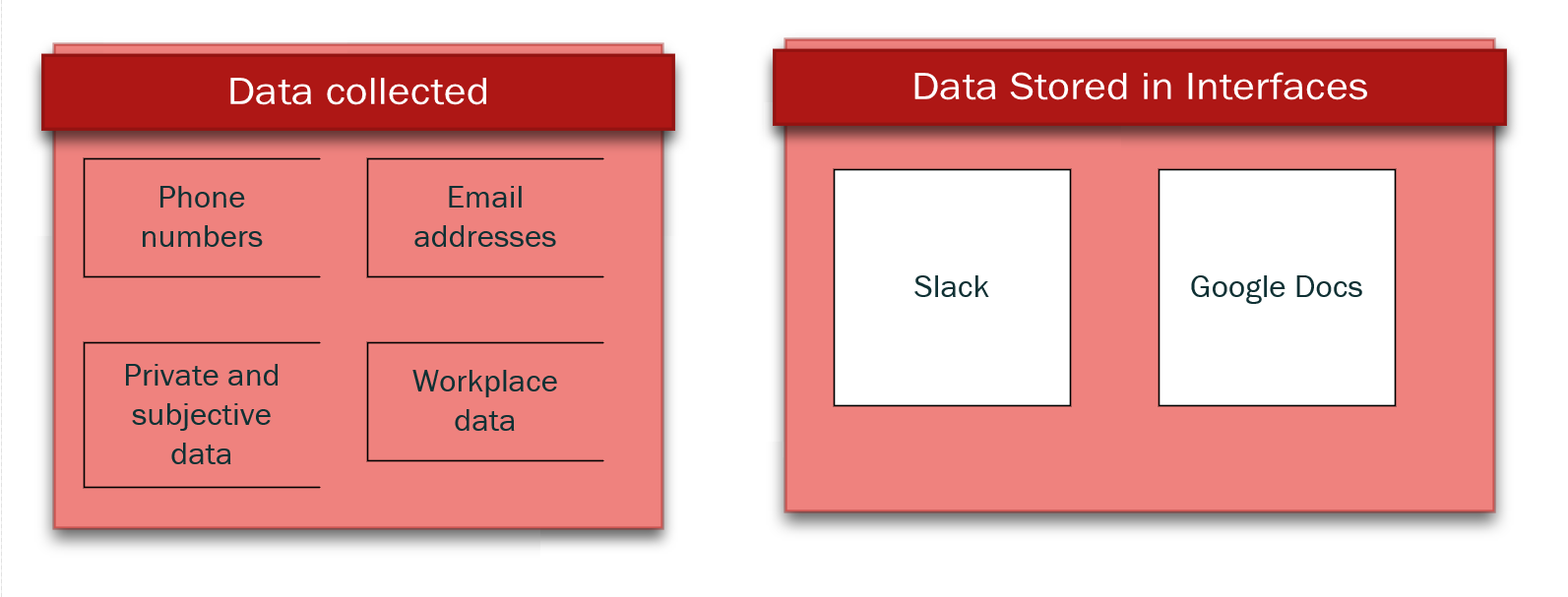

- Biographical information or current living situation, including dates of birth, Social Security numbers, phone numbers, and email addresses. We are all used to considering our SSN sensitive, but now that our phones and email addresses are tied so closely to individuals, they need to be considered sensitive, too. Just think of how many sites require your birthday, phone number, or email address.

- Looks, appearance and behaviour, including eye colour, weight and character traits. Much of this data can be found simply by looking at your pictures. TV shows and companies have required individuals to sign a waiver before showing their faces in marketing; GDPR now forces individuals and companies to consider even more of what they’re capturing.

- Workplace data and information about education, including salary, tax information and student numbers.

- Private and subjective data, including religion, political opinions and geo-tracking data.

- Health, sickness and genetics, including medical history, genetic data and information about sick leave. This is data that could increase your insurance rates or otherwise be used against you, even to cause physical harm.
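As a first pass at finding this data in your own systems, you might scan schema field names against the categories above. This is a rough sketch: the keyword lists in `CATEGORY_KEYWORDS` are hypothetical examples, not anything defined by GDPR, and substring matching will both miss fields (a column named `c7`) and flag harmless ones, so treat the output as a starting point for manual review.

```python
import re

# Hypothetical keyword hints per category; tune these for your own schemas.
CATEGORY_KEYWORDS: dict[str, tuple[str, ...]] = {
    "biographical": ("birth", "dob", "ssn", "phone", "email", "address"),
    "appearance_behaviour": ("eye_colour", "eye_color", "weight", "height", "photo"),
    "workplace_education": ("salary", "tax", "student", "employer"),
    "private_subjective": ("religion", "political", "geo", "location", "gps"),
    "health_genetics": ("medical", "diagnosis", "genetic", "sick_leave", "health"),
}


def classify_field(field_name: str) -> set[str]:
    """Return the categories whose keywords appear in a normalized field name."""
    # "Date of Birth" -> "date_of_birth", "GPS-Location" -> "gps_location"
    normalized = re.sub(r"[^a-z0-9]+", "_", field_name.lower())
    return {
        category
        for category, keywords in CATEGORY_KEYWORDS.items()
        if any(keyword in normalized for keyword in keywords)
    }


def sensitive_fields(schema: list[str]) -> dict[str, set[str]]:
    """Map each field that matched at least one category to its categories."""
    return {name: cats for name in schema if (cats := classify_field(name))}


print(sensitive_fields(["order_id", "customer_email", "sick_leave_days", "sku"]))
```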

Whenever this data exists within your infrastructure, consider where it lives and who it is transmitted to, for everyone, not just those in the EU.

How do we evaluate our network and clearly account for how we use the data we collect?

Your assessment should be tied closely to your business disaster recovery program as well as your corporate risk register. Does your call center data get sent to a third party for services? Does your PCI data get processed somewhere else? Is your clients’ CRM data stored offsite? You should know where this data is, its importance to your company, and how to mitigate the threats involved with sharing it. Whenever a new system or process is put in place, ask yourself how, if at all, this landscape changes.

Create a network diagram or a mind map to help picture how consumer data flows through your network. For example, consider a phishing campaign:

If you often pass or handle customer information in Slack or email, consider the implications of a breach of Slack’s infrastructure. Determine each use case and map out which pieces of your infrastructure it touches, how the data is stored, and where it gets sent. Consider two age-old security concepts: data in transit and data at rest. Encrypting data at each of these stages helps protect it.
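The mind map can also be kept as data, which makes it checkable. In this hypothetical Python model, each `System` notes whether it encrypts at rest and whether it is a third party, and each `Flow` notes what personal data moves and whether the hop is encrypted in transit. `find_gaps` flags unencrypted hops and unencrypted stores, and `downstream` walks the flows to list every system a piece of data can reach, which is exactly the list you need when a customer asks for a correction or erasure. All names here are assumptions for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    encrypted_at_rest: bool
    third_party: bool = False


@dataclass(frozen=True)
class Flow:
    source: str
    destination: str
    data_types: frozenset[str]  # personal data carried on this hop
    encrypted_in_transit: bool


def find_gaps(systems: dict[str, System], flows: list[Flow]) -> list[str]:
    """Flag hops that send personal data unencrypted and stores that keep it unencrypted."""
    findings = []
    for flow in flows:
        if not flow.data_types:
            continue  # no personal data on this hop
        carried = sorted(flow.data_types)
        if not flow.encrypted_in_transit:
            findings.append(
                f"in transit: {flow.source} -> {flow.destination} sends {carried} unencrypted"
            )
        dest = systems[flow.destination]
        if not dest.encrypted_at_rest:
            owner = "third-party " if dest.third_party else ""
            findings.append(f"at rest: {owner}system {dest.name} stores {carried} unencrypted")
    return findings


def downstream(flows: list[Flow], start: str) -> set[str]:
    """Every system reachable from `start` (breadth-first), i.e. everywhere the data can end up."""
    graph: dict[str, list[str]] = {}
    for flow in flows:
        graph.setdefault(flow.source, []).append(flow.destination)
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)
    return seen
```

For instance, modeling a web front end that feeds a CRM, which in turn posts emails to Slack and forwards phone numbers to a third-party vendor over plain FTP, would yield one in-transit finding and one at-rest finding for the vendor hop, and `downstream(flows, "web")` would list all three downstream systems.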

Each part of the regulation has a different level of difficulty. Some items may require you to document and consider all use cases. Some may require a change in technical controls (retention policies) and others a change in behaviour (storing files in the proper document repository instead of on your local drive).
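A retention policy is one of the technical controls that translates most directly into code. Here is a minimal sketch, assuming each stored record carries a category and a creation timestamp; the categories and periods in `RETENTION` are hypothetical placeholders, since the right values come out of your own legal and business review. Records with no defined period are kept but reported, so gaps in the policy surface instead of being silently ignored.

```python
from collections.abc import Mapping
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredRecord:
    record_id: str
    category: str
    created_at: datetime


# Hypothetical retention periods; the real values come from your own legal review.
RETENTION: Mapping[str, timedelta] = {
    "call_recording": timedelta(days=90),
    "support_ticket": timedelta(days=730),
}


def apply_retention(
    records: list[StoredRecord],
    now: datetime,
    retention: Mapping[str, timedelta] = RETENTION,
) -> tuple[list[StoredRecord], list[StoredRecord], set[str]]:
    """Split records into (kept, expired, unmapped categories)."""
    kept: list[StoredRecord] = []
    expired: list[StoredRecord] = []
    unmapped: set[str] = set()
    for record in records:
        limit = retention.get(record.category)
        if limit is None:
            # No policy for this category: keep it, but surface the gap.
            unmapped.add(record.category)
            kept.append(record)
        elif now - record.created_at > limit:
            expired.append(record)
        else:
            kept.append(record)
    return kept, expired, unmapped


now = datetime.now(timezone.utc)
kept, expired, unmapped = apply_retention(
    [StoredRecord("r1", "call_recording", now - timedelta(days=120))], now
)
print([r.record_id for r in expired])  # ['r1']
```

A scheduled job would then delete (or anonymize) whatever lands in `expired` and raise a ticket for every category in the unmapped set.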

It’s also important to recognize the “right” way to handle customer data and to make sure that keeping that data secure is the easiest thing for your employees to do. Keep your processes simple; the right way to share data shouldn’t also be the hardest. Don’t rely only on the controls you put on the server side without also considering the workarounds people will create to get their own jobs done.

Improve these processes through clear design and by educating employees on the proper uses of this data.

Who? You, your IT staff, security, legal, HR, call center reps: everyone who interfaces with a customer or sensitive data should be on board and understand the company’s stance, the importance of privacy, and how it changes their daily, quarterly, and annual processes.