After nine tutorials, sixteen Stack Overflow posts, and several hours (or workweeks) of effort, you’ve finally done it: you’ve got something in Amazon Web Services (AWS) to work as expected. It could be something as simple as a static website or as complicated as a massive, blockchain-based, distributed machine learning web application, complete with a mobile app. All without a data center next to you.

This is cloud computing at its finest, but with great power comes great responsibility. Many of the AWS security incidents of the last three years happened because of user misconfigurations. Organizations like Time Warner, Accenture, Verizon, WWE, Dow Jones, and GoDaddy have each unwittingly exposed thousands, if not millions, of records of confidential customer data to the public.

So what can we learn from events like these? First, do not make an S3 bucket public unless you absolutely need to. Second, follow the Principle of Least Privilege for all of your AWS users, roles, and policies: each IAM policy you create should allow a given entity to do its job and nothing else. That sounds great and all, but the crux of the matter is: HOW do you actually do it?

We’ve already covered some basic steps on Security Misconfigurations and Network Checkups for on-prem resources, but what about security configurations in the cloud? If you’re running resources on AWS, you don’t have to worry about much of their day-to-day management. However, assessing AWS resources, for example during a penetration test, can be complicated. In this post, we will cover three ways to tame and cultivate the messy jungle that is your AWS environment.

Lock down permissions

One of the best ways to secure your AWS environment is to restrict permissions to specific resources. Let’s say, for example, you’re backing up company HR information in S3. You’ve enabled default encryption on all objects in the bucket, and you use CloudTrail with alerts that fire whenever users try to access objects. Good stuff, but let’s say you also have a team of developers on staff who need to work with S3. An inattentive project manager may simply give the developers the AmazonS3FullAccess policy. Now all the company information stored in S3 is available to everyone with that policy, which means those developers have access to things like payroll information. None of the other controls matter: a simple user misconfiguration has compromised the confidentiality of the data.

This is where tools like the AWS Policy Generator come in handy. With it, you can create IAM policies that are restricted to specific resources. Here is an example of an overly permissive policy on the left and a more restrictive policy on the right. Both grant full S3 access, but the policy on the right limits that access to a single bucket.
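As a rough sketch of the contrast between the two kinds of policy, the Python below builds both documents with the standard `json` module. These are illustrative policies rather than the exact ones pictured, and the bucket name `dev-team-bucket` is hypothetical.

```python
import json

def full_s3_policy():
    """Overly permissive: every S3 action on every resource in the account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"}
        ],
    }

def single_bucket_policy(bucket):
    """Full S3 access, but only to one bucket and the objects inside it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # bucket-level actions, e.g. s3:ListBucket
                    f"arn:aws:s3:::{bucket}/*",  # object-level actions, e.g. s3:GetObject
                ],
            }
        ],
    }

if __name__ == "__main__":
    # "dev-team-bucket" is a hypothetical bucket name used for illustration.
    print(json.dumps(single_bucket_policy("dev-team-bucket"), indent=2))
```

The restricted policy lists two ARNs because bucket-level actions such as `s3:ListBucket` match the bucket ARN, while object-level actions such as `s3:GetObject` match the `bucket/*` ARN.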

The aforementioned JSON can be modified to include a list of resources, or you can explicitly deny access to a given resource or service; an explicit deny always takes precedence over any allow. You can add even more granularity, for example by restricting an entity to read-only access, or by allowing it to modify a bucket’s access control list (ACL). There is far more to AWS access management than fits in a single blog post. The key takeaway is this: give user accounts and roles only the privileges essential to perform their intended function.
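To make the precedence rule concrete, here is a deliberately simplified model of how identity-based policy statements combine, written in Python. It is a sketch under stated assumptions, not AWS's evaluation engine: it ignores conditions, resource-based policies, permission boundaries, and service control policies, and it uses `fnmatch` to approximate IAM's `*` wildcard. The bucket names `hr-backups` and `reports` are hypothetical.

```python
from fnmatch import fnmatchcase

# Simplified model of identity-based policy evaluation (an assumption, not AWS's engine):
#   1. any matching Deny statement -> "explicitDeny"
#   2. otherwise any matching Allow -> "allowed"
#   3. otherwise                    -> "implicitDeny" (the default)
# Conditions, NotAction/NotResource, resource-based policies, permission
# boundaries and SCPs are not modeled.

def _as_list(value):
    """IAM lets Statement, Action and Resource be a single item or a list."""
    return value if isinstance(value, list) else [value]

def _matches(statement, action, resource):
    # Action names are case-insensitive in IAM; ARNs are compared as written.
    actions = _as_list(statement["Action"])
    resources = _as_list(statement["Resource"])
    return (any(fnmatchcase(action.lower(), pattern.lower()) for pattern in actions)
            and any(fnmatchcase(resource, pattern) for pattern in resources))

def evaluate(policies, action, resource):
    """Return "allowed", "explicitDeny" or "implicitDeny" for one request."""
    allowed = False
    for policy in policies:
        for statement in _as_list(policy["Statement"]):
            if _matches(statement, action, resource):
                if statement["Effect"] == "Deny":
                    return "explicitDeny"  # a Deny wins no matter what else matches
                allowed = True
    return "allowed" if allowed else "implicitDeny"

# Developers get broad S3 access, except for the (hypothetical) HR backup bucket.
DEVELOPER_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {"Effect": "Deny", "Action": "s3:*",
         "Resource": ["arn:aws:s3:::hr-backups", "arn:aws:s3:::hr-backups/*"]},
    ],
}

# Read-only access to a list of resources in one (hypothetical) bucket.
READ_ONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:ListBucket"],
         "Resource": ["arn:aws:s3:::reports", "arn:aws:s3:::reports/*"]},
    ],
}

if __name__ == "__main__":
    print(evaluate([DEVELOPER_POLICY], "s3:GetObject", "arn:aws:s3:::hr-backups/payroll.csv"))  # explicitDeny
    print(evaluate([READ_ONLY_POLICY], "s3:PutObject", "arn:aws:s3:::reports/q1.csv"))  # implicitDeny
```

In the developer policy, the broad Allow matches the payroll object, but the Deny on the HR bucket also matches, so the request is refused; this is the "deny takes precedence" behavior described above.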

Test your policies

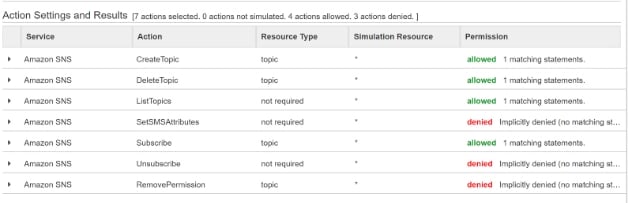

Now you have a pile of massive, enigmatic JSON blobs. How can you tell whether the policies you’ve written actually work? There are a couple of ways to test your AWS policies. The first is a manual check: attach the policy to the user or resource in question and try it out. The second is to use the IAM Policy Simulator to test your policy against a number of expected use cases.

The following screenshot shows a simulation of an IAM policy that allows creating, deleting, listing, and subscribing to SNS topics.
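The same kind of check can be scripted. The sketch below calls the IAM policy simulator through boto3's `simulate_custom_policy`, which evaluates a policy supplied as a JSON string without attaching it to anyone, and compares the decisions against a table of expected use cases. The SNS policy here is a hypothetical one matching the description above, not the policy in the screenshot, and running it for real assumes AWS credentials that allow `iam:SimulateCustomPolicy`. The IAM client is passed in as a parameter so it can be swapped for a stub in tests.

```python
import json

# Hypothetical policy matching the scenario: create, delete, list and subscribe to SNS topics.
SNS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["sns:CreateTopic", "sns:DeleteTopic", "sns:ListTopics", "sns:Subscribe"],
        "Resource": "*",
    }],
}

# Expected use cases: action name -> decision we expect from the simulator.
EXPECTED = {
    "sns:CreateTopic": "allowed",
    "sns:DeleteTopic": "allowed",
    "sns:ListTopics": "allowed",
    "sns:Subscribe": "allowed",
    "sns:Publish": "implicitDeny",  # not granted, so it should be denied
}

def simulate(iam_client, policy, actions):
    """Return {action: decision} from the IAM SimulateCustomPolicy API, following pagination."""
    decisions = {}
    kwargs = {"PolicyInputList": [json.dumps(policy)], "ActionNames": list(actions)}
    while True:
        response = iam_client.simulate_custom_policy(**kwargs)
        for result in response["EvaluationResults"]:
            decisions[result["EvalActionName"]] = result["EvalDecision"]
        if not response.get("IsTruncated"):
            return decisions
        kwargs["Marker"] = response["Marker"]

def failures(decisions, expected=EXPECTED):
    """Map each action whose decision differs from expectations to (actual, expected)."""
    return {action: (decisions.get(action), want)
            for action, want in expected.items() if decisions.get(action) != want}

if __name__ == "__main__":
    import boto3  # requires credentials allowed to call iam:SimulateCustomPolicy
    decisions = simulate(boto3.client("iam"), SNS_POLICY, EXPECTED)
    print(failures(decisions) or "all expected use cases pass")
```

Keeping the expected decisions in a table makes it easy to rerun the whole set every time the policy changes, rather than clicking through each case by hand.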

Consider a separate AWS account

Even after you precisely write and test your new policies, there’s still the Sisyphean DevOps task of rolling them out to production. In production, you aren’t testing against generic use cases; chances are you’re trying to get a policy to work so that some transaction in your stack completes. To do that, it may be more expedient to create a separate AWS account and give certain users admin access within that environment. That way, whatever mess your DevOps team concocts is isolated from your production environment. Plus, your DevOps team can fully experiment with real AWS resources to see how to properly provision the stack and create staging environments that can be readily ported over to production. Just remember to remove these resources shortly after building them to keep costs low.
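One common way to give selected users admin access in a separate sandbox account is a cross-account IAM role. The sketch below, assuming boto3, builds a trust policy that lets principals from your main account assume an admin role in the sandbox account, and fetches temporary credentials for that role with STS `assume_role`. The account IDs and the role name `SandboxAdmin` are placeholders; the role would also need a permissions policy (for example the AWS managed `AdministratorAccess` policy) attached in the sandbox account only.

```python
import json

def sandbox_admin_trust_policy(trusted_account_id):
    """Trust policy for an admin role in the sandbox account.

    Naming the trusted account's root ARN delegates the decision to that account:
    its IAM principals can assume the role if their own policies allow sts:AssumeRole on it.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
            "Action": "sts:AssumeRole",
        }],
    }

def sandbox_credentials(sts_client, role_arn, session_name="devops-sandbox"):
    """Assume the sandbox role and return temporary credentials as boto3.Session kwargs."""
    creds = sts_client.assume_role(RoleArn=role_arn, RoleSessionName=session_name)["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }

if __name__ == "__main__":
    import boto3
    # Placeholder account IDs and a hypothetical role name.
    print(json.dumps(sandbox_admin_trust_policy("111122223333"), indent=2))
    sandbox = boto3.Session(**sandbox_credentials(
        boto3.client("sts"), "arn:aws:iam::444455556666:role/SandboxAdmin"))
    # Anything created through `sandbox` lives in the sandbox account, not production.
```

Because the credentials are temporary and scoped to the sandbox account, a misstep during experimentation stays inside that account.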

Summary

We’ve covered three ways to manage your sprawling AWS infrastructure: lock down permissions with resource-specific policies, test those policies with the IAM Policy Simulator, and experiment with the moving parts of your stack in a staging environment within a separate AWS account that fully replicates production. We also covered tools like the AWS Policy Generator, which help you create new IAM policies that follow the Principle of Least Privilege. Finally, in the name of all that is good and decent, DO NOT make your S3 buckets public unless you are creating a public resource.